Generative AI in Smart Devices Could Become Personal

Lenovo CEO Yang Yuanqing sees “personal foundation models” as the future for generative AI

Lenovo CEO Yang Yuanqing sees the next iteration of generative AI taking the form of a ‘personal foundation model’ that knows only you and resides on your smart device.

“In the future, your PC may be an AI PC. Your phone may be an AI phone, and your workstation may be an AI workstation,” he said at Lenovo Tech World 2023 in Austin, Texas. The event also saw in-person appearances by Nvidia CEO Jensen Huang and AMD CEO Lisa Su to discuss partnerships with Lenovo. Microsoft CEO Satya Nadella joined virtually.

A personal foundation model would be trained on your personal data and could answer ChatGPT-like queries tailored to you. It would not even need an internet connection to work.


Foundation models are large machine learning models (often on the order of 100 billion to 200 billion parameters) that are trained on vast, internet-scale quantities of data and can be applied to a wide range of tasks (unlike, say, a model that can only play chess). OpenAI’s ChatGPT is built on the foundation models GPT-3.5 and GPT-4.

Currently, users can choose between two basic types of foundation model: public and private. Public models like ChatGPT are accessible to everyone; they are trained on public data and handle general tasks. They work well overall, but any data you feed them may effectively end up in the public domain. Their output can also be so general that answers are less accurate or specific.

Private models, fine-tuned or trained on industry- or company-specific data, are better at answering specific questions and performing specific tasks. They also keep internal data in-house, but they are tuned for a group of people, such as a company’s employees, rather than personalized for any one individual.

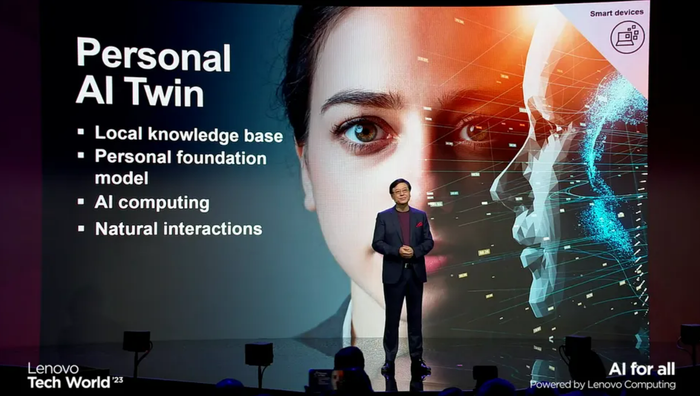

Personal foundation models narrow the audience to one: you. Because such a model is trained on your data, your personal AI could make travel plans for you and pick restaurants or activities that match your tastes. Lenovo’s model compression technology lets the personal foundation model run on your own device.

“It becomes a digital extension of you, essentially,” Yang said. “You will now have a personal AI twin.”

His vision echoes that of Mustafa Suleyman, the DeepMind co-founder who now runs Inflection AI. Suleyman also believes the next stage is a personal AI that can, for example, act as your legal proxy and make purchases for you.

In June, Lenovo said it plans to invest $1 billion in AI devices, AI infrastructure and AI solutions over the next three years, on top of what it has already poured into the technology.

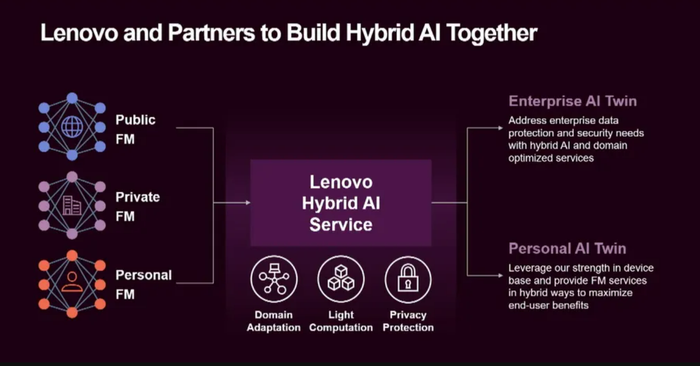

Hybrid AI Framework

The future of foundation models is a hybrid AI framework of public, private and personal models, said Yong Rui, Lenovo CTO.


To show how the framework works, he first explained how each type of foundation model functions on its own and then how they work together.

It all starts with a foundation model that has not been trained on any data. Once it is trained with public domain data at internet scale, it becomes a public foundation model. Add further training on enterprise-specific data and it becomes a private foundation model that can do both general and enterprise-knowledge tasks, Rui said.

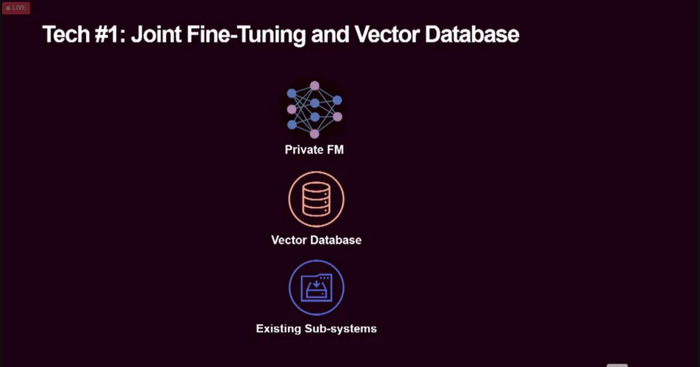

A private foundation model can understand enterprise-specific tasks, but getting precise, accurate outputs also requires an enterprise knowledge vector database. Both the model and the database must then be integrated with existing subsystems, such as enterprise resource planning (ERP) and customer relationship management (CRM) systems. The result is a private foundation model that can carry out enterprise-specific tasks end-to-end, Rui said.
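Rui did not describe how the vector database is queried. A common pattern, assumed here rather than confirmed for Lenovo, is retrieval: the question is turned into an embedding vector, the most similar stored documents are found by cosine similarity, and those documents are passed to the model as context. In this minimal Python sketch, `KnowledgeStore`, `cosine` and `build_prompt` are hypothetical names, and the three-dimensional vectors are toy stand-ins for the output of a real embedding model.

```python
import math
from dataclasses import dataclass, field


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either has zero length)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


@dataclass
class KnowledgeStore:
    """Hypothetical in-memory stand-in for an enterprise knowledge vector database."""
    docs: list[tuple[str, list[float]]] = field(default_factory=list)

    def add(self, text: str, embedding: list[float]) -> None:
        self.docs.append((text, embedding))

    def search(self, query: list[float], k: int = 2) -> list[str]:
        # Rank stored documents by similarity to the query and return the top k texts.
        ranked = sorted(self.docs, key=lambda d: cosine(query, d[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


def build_prompt(question: str, store: KnowledgeStore, query_vec: list[float], k: int = 2) -> str:
    # Prepend the retrieved enterprise documents so the model answers from them.
    context = "\n".join(store.search(query_vec, k))
    return f"Context:\n{context}\n\nQuestion: {question}"


# Toy data: in practice an embedding model would produce these vectors.
store = KnowledgeStore()
store.add("Refund policy: 30 days", [1.0, 0.0, 0.0])
store.add("VPN setup guide", [0.0, 1.0, 0.0])
store.add("Expense limits for travel", [0.7, 0.0, 0.7])
prompt = build_prompt("How long do customers have to request a refund?", store, [0.9, 0.1, 0.0])
```

With the toy query vector, the refund and travel-expense documents rank highest and are placed in the prompt; the unrelated VPN guide is left out.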

To get to a personal foundation model, the large model is compressed so it can fit on a smaller device. Lenovo does this by discovering the ‘coupled structures’ within the foundation model, such as neurons and the connections (‘synapses’) between them. These structures are ranked by importance. Keeping the more important structures and pruning the least important ones can “significantly” reduce the model size while keeping “pretty good performance,” Rui said. The much smaller model can then fit on a PC or smartphone.
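Lenovo has not published its compression algorithm, so the following is only a generic illustration of structured pruning under stated assumptions. It uses a tiny two-layer network in plain Python and treats each hidden neuron’s incoming weights (a row of `w1`) and outgoing weights (a column of `w2`) as one coupled structure that must be removed together. Importance is scored as the product of their L2 norms, a common heuristic that may differ from Lenovo’s criterion. The function names are hypothetical.

```python
import math

Matrix = list[list[float]]


def l2(values: list[float]) -> float:
    return math.sqrt(sum(v * v for v in values))


def neuron_importance(w1: Matrix, w2: Matrix) -> list[float]:
    """Score hidden neuron j by its coupled structure: row j of w1 (incoming
    weights) and column j of w2 (outgoing weights), which live or die together."""
    scores = []
    for j, incoming in enumerate(w1):
        outgoing = [row[j] for row in w2]
        scores.append(l2(incoming) * l2(outgoing))
    return scores


def prune_hidden(w1: Matrix, w2: Matrix, keep_ratio: float) -> tuple[Matrix, Matrix]:
    """Keep the most important hidden neurons and drop the rest."""
    scores = neuron_importance(w1, w2)
    n_keep = max(1, round(len(w1) * keep_ratio))
    # Rank by importance, keep the top n_keep, then restore the original order.
    ranked = sorted(range(len(w1)), key=lambda j: scores[j], reverse=True)
    kept = sorted(ranked[:n_keep])
    new_w1 = [w1[j] for j in kept]
    new_w2 = [[row[j] for j in kept] for row in w2]
    return new_w1, new_w2


def count_params(*mats: Matrix) -> int:
    return sum(len(m) * len(m[0]) for m in mats)


# Toy network: 2 inputs -> 4 hidden neurons -> 1 output.
w1 = [[1.0, 0.0], [0.1, 0.1], [0.0, 2.0], [0.05, 0.0]]
w2 = [[1.0, 0.5, 1.0, 0.1]]
new_w1, new_w2 = prune_hidden(w1, w2, keep_ratio=0.5)
```

Here the two weakest neurons (indices 1 and 3) are dropped, halving the parameter count from 12 to 6. Removing whole neurons, rather than scattered individual weights, is what lets the weight matrices themselves shrink.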

But the ideal setup combines all three.

Before a task is sent to these models for processing, a separate module focused on data management and privacy must assess it: Which model is it suitable for? If it contains no private or proprietary information, it can be sent to any foundation model. If it contains personal or company data, it is routed to a personal or private model, respectively.

Afterwards, the outputs are reassembled into a complete answer.
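The routing and reassembly steps can be sketched in Python as follows. This is a minimal illustration, not Lenovo’s implementation: it assumes the task has already been split into subtasks tagged with whether they contain personal or company data, and the names `Subtask`, `route` and `run_hybrid`, along with the stub models, are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable


class Target(Enum):
    PUBLIC = "public"
    PRIVATE = "private"
    PERSONAL = "personal"


@dataclass
class Subtask:
    text: str
    has_personal_data: bool = False
    has_company_data: bool = False


def route(task: Subtask) -> Target:
    # Hypothetical privacy rule: personal data goes to the personal model,
    # company data to the private model, anything else to the public model.
    # Checking personal data first is an arbitrary assumption for mixed cases.
    if task.has_personal_data:
        return Target.PERSONAL
    if task.has_company_data:
        return Target.PRIVATE
    return Target.PUBLIC


def run_hybrid(subtasks: list[Subtask], models: dict[Target, Callable[[str], str]]) -> str:
    outputs = [models[route(t)](t.text) for t in subtasks]
    # Reassemble the partial outputs, in their original order, into one answer.
    return " ".join(outputs)


# Stub "models" that just label which model handled each subtask.
models = {
    Target.PUBLIC: lambda q: f"[public] {q}",
    Target.PRIVATE: lambda q: f"[private] {q}",
    Target.PERSONAL: lambda q: f"[personal] {q}",
}
tasks = [
    Subtask("Summarize today's AI news"),
    Subtask("Check Q3 sales in CRM", has_company_data=True),
    Subtask("Fit it into my calendar", has_personal_data=True),
]
answer = run_hybrid(tasks, models)
```

A real system would need a far more careful way to detect sensitive data than hand-set flags, and a smarter merge step than joining strings.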

“By combining public, private and personal foundation models we will get a powerful hybrid AI framework,” Rui said.

This article first appeared on IoT World Today's sister site, AI Business.
